Hi there! This is Nick. In these monthly articles, I share my understanding of key concepts in responsible AI and invite you to follow my thought process. They are aimed at those who have just started caring about responsible and trustworthy AI, but you may also find new inspiration here if you are more experienced.

In the last article, I wrote about why trust matters and how technology, and AI in particular, can amplify trust between people and organizations. The concept of trustworthy and responsible AI helps us use AI systems to our benefit while avoiding over-reliance on the technology and potential harms. (You can read more about why trustworthiness matters in my last article here.)

For that to work, certain requirements need to be met and controlled when developing or using AI systems under this concept. This is essentially what governing AI means. But one step comes before that, which asks: “What actually characterizes trustworthy AI?” I touched upon the characteristics of trustworthy systems last time and will continue with them today.

Principles behind trustworthy AI

When you screen the literature on trustworthy AI systems, you will quickly notice that there has been a lot of research on the topic, motivated especially by philosophical and ethical questions about AI. Out of this research, public institutions and private organizations alike have created many guidelines that try to define and sort the principles behind trustworthy AI. These principles can describe both technical and societal characteristics. Since 2016, the number of such published guidelines has increased sharply. The “Ethics Guidelines for Trustworthy AI” by the High-Level Expert Group on Artificial Intelligence (HLEG AI), for instance, have been particularly influential. They also laid the groundwork for the EU AI Act, the first major regulation on AI.

Such guidelines usually lay down three closely related key drivers of trustworthy AI: the law, ethics, and the avoidance of harm. From there, various principles can be defined. You could summarize every principle or set of principles in one phrase: supporting everyone’s well-being.

I’d argue that ethics has a large influence on regulation (as seen with the HLEG AI guidelines and the EU AI Act), and regulatory compliance may well be non-negotiable for trustworthy AI systems. I will come back to AI regulation in another article.

While ethical discussions can be quite abstract and, honestly, also unsatisfying, they help us revisit what really matters to us when using AI. Ethics can be a good instrument for handling situations in which the law falls short or does not exist. But ethical principles also often lack enforcement power.

Depending on whom you ask and the context you are in, the definition of ethics varies. This means that the perception of what is a good or a bad action, or of what is a trustworthy and harmless AI system, can differ. Ethical principles are therefore especially helpful when considering how AI is used responsibly in a specific context. However, this same variation makes it harder to reach a consensus on the definitive principles of trustworthy AI.

From a more technical perspective, the characteristics of trustworthy AI systems can be less opaque. The HLEG AI guidelines highlight technical robustness and transparency, for instance, which can be translated more clearly into specific requirements. You could say that a trustworthy AI system needs to have a certain degree of quality: it performs as intended under varying conditions, it reaches a certain level of accuracy, it protects you from external attacks, and its underlying functioning can be understood.

Personally, I think it’s important to define some guiding principles when you are developing or using AI systems, for two reasons: a) they act as a compass for your decisions in situations of uncertainty, and b) they help you proactively define requirements to actually build or assess trustworthy AI systems. But I also think it’s not worth spending too much energy on selecting which principles to adhere to, as it is all too easy to get lost in a weird, theoretical world of definitions in AI ethics.

Fortunately, as mentioned above, many have already invested resources into research and into translating principles into technical and organizational requirements or measures for trustworthy and responsible AI systems. The HLEG AI even gives readers of its guidelines an assessment list with more detailed questions to ask yourself.

See “Good resources to continue learning” at the very end of this article for more guiding material.

Common core principles

When we thought through how to design an organizational AI policy at my workplace, trail, we spent some time researching guiding principles and found these six commonly applied sets of principles of trustworthy AI:

Transparency, Explainability & Auditability: It should be clear how an AI system works and how it arrived at an output. This also includes the ability to communicate (requested) information to stakeholders.

Security & Safety: Prevent misuse and harm, as well as unauthorized access to AI systems. Data needs to be secure.

Robustness & Reliability: AI systems should perform as intended in varying and also unforeseeable circumstances.

Justice, Equity, Fairness & Non-Discrimination: Bias and discrimination through AI systems should be avoided, and human rights should be upheld.

Privacy: Measures should ensure the confidentiality, integrity, and availability of AI applications and their data, including personal and sensitive information.

Accountability, Responsibility & Liability: AI systems must be subject to human oversight. AI operators need to be held accountable for AI systems and their functioning.

Note that there are many more principles and that this is just a tiny excerpt of grouped principles. If you want to dive deeper, have a look at this study by Corrêa et al., which reviewed 200 guidelines on AI ethics and governance. You can also send me a message if you have questions about the sets of principles above.

And if you are interested in how we implemented these in an organizational AI policy at trail, you can read more in this article.

The need for adaptation and getting into action

As stated, it is difficult to make practical use of vague principles and statements. When choosing your guiding AI principles, you need to define them for yourself and adapt them to your context and industry. Simply picking principles that sound good, without properly thinking about their definitions, tempts you to align them with existing business practices or product features. As Luke Munn has written, this makes the choice of principles useless, because you do not meaningfully reflect on your business.

You should first evaluate what principles are actually needed and what requires special attention when developing or using AI in your organization. Telefónica, for instance, clearly focussed on defining the guiding principles that are unique to AI systems. They have also referred to existing practices within their organization when necessary, or when principles are applicable more generally, but are very relevant to AI as well.

Some principles you come across may be more relevant to public institutions or governments than to private organizations. There could also be trade-offs between principles in certain situations. And some are more important when you are developing AI than when you are only using third-party AI.

Keep the selection of your principles simple. Focus on the 4-7 most important ones that should guide the development or use of trustworthy and responsible AI in your organization. You may cover others anyway when applying best practices in AI governance. Treat principles as a “departure point” for any discussion you have around AI and for defining the most essential, concrete requirements and controls for your systems. The latter will be the subject of another article.

Helpful questions to ask yourself when selecting AI principles:

Who wrote these principles?

For what reason?

For whom are they meant?

Why should I follow these principles?

How do they fit my organization? What is my interpretation of them?

A message to AI

Here is how DeepSeek summarizes the characteristics of trustworthy AI:

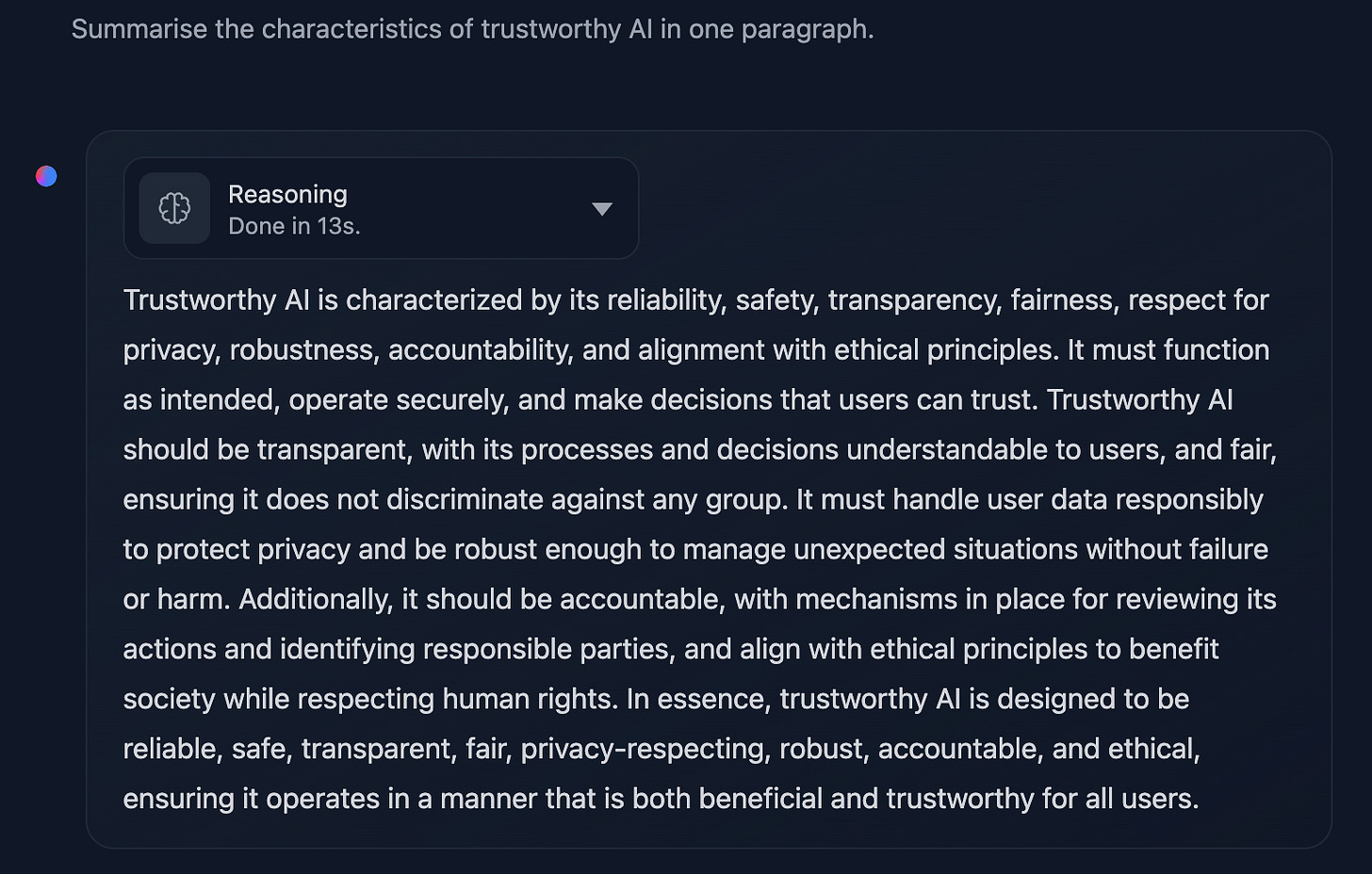

Claude thinks you should use these principles to guide your AI development:

What’s next

What is your take on the characteristics and principles behind trustworthy AI? Have you thought about these in your organization? Let me know by commenting or sending me an email.

Next up, I will write about AI regulation and the EU AI Act, and we will slowly progress into the more tangible and actionable side of responsible AI. Subscribe for free if you don’t want to miss out.

— Nick


📚 Good resources to continue learning

“Ethics Guidelines for Trustworthy AI” by the High-Level Expert Group on AI (European Commission)

“Worldwide AI ethics: A review of 200 guidelines and recommendations for AI governance” by Corrêa et al.

“Implementing Australia’s AI Ethics Principles” from the National AI Centre is a more practical guide to applying principles

The AI Ethics Lab has an interactive explorer on its website that helps you compare various guidelines and principles, including those from private organizations (such as BMW or IBM)

“Responsible AI by Design in Practice” from Telefónica explains their approach to implementing and practicing their chosen principles

The popular NIST “Artificial Intelligence Risk Management Framework (AI RMF)” also gives some guidance on trustworthy AI characteristics (a bit more technical and aligned with various ISO/IEC standards)

“The uselessness of AI ethics” by Luke Munn is a thought-provoking article that explores exactly that: why it does not make sense to choose ethical principles

“Connecting the dots in trustworthy Artificial Intelligence: From AI principles, ethics, and key requirements to responsible AI systems and regulation” by Díaz-Rodríguez et al. is a very interesting paper detailing the aforementioned principles

UNESCO’s “Recommendation on the Ethics of Artificial Intelligence”

The guide from IEEE on “Ethically Aligned Design” of autonomous and intelligent systems